Identifying the Source of Network Traffic Originating from Amazon EKS Clusters

Introduction

If you run workloads in Amazon EKS, you might have noticed a peculiar behavior: when apps in EKS pods communicate outbound with other servers, target servers “see” network traffic coming from the EKS node IP, not the pod IP. This article explains why this behavior exists & how to change it if required.

Scenario

Assuming you’re using the Amazon VPC CNI plugin, this behavior shows up when the target servers are outside the EKS VPC but reachable privately, i.e. in VPCs connected to the EKS VPC by peering, transit gateway, Direct Connect, etc. This article assumes your EKS nodes are in private subnets.

When EKS pods communicate with servers in the same VPC, the target servers will “see” the pod IP, not the node IP.

And when EKS pods in private subnets with NAT gateways communicate with public servers on the internet, the target servers will see the traffic coming from the NAT gateway, as is the case for any non-EKS private AWS workload.

Source NAT

By default, each pod in your cluster is assigned a private IP from its VPC’s CIDR. Pods in the same VPC communicate with each other using these private IPs. When a pod communicates with any IP outside its VPC’s CIDR, the VPC CNI plugin translates the pod’s IP to the primary private IP of the primary ENI of the node the pod runs on. This is why a server in a VPC connected to the EKS VPC sees the node IP, not the pod IP.
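To make the rule concrete, here is a minimal bash sketch of the decision described above. It is a simplified model, not VPC CNI’s implementation (the plugin programs iptables rules on each node): it assumes a single IPv4 VPC CIDR, ignores any NAT gateway further along the path, & the function names (ip_to_int, in_cidr, snat_source) are hypothetical. The pod & node IPs are the ones from this article’s NGINX logs; the VPC CIDR 10.75.0.0/16 & the connected-VPC server 10.80.1.10 are assumptions.

```bash
#!/usr/bin/env bash
# Simplified, hypothetical model of VPC CNI's default SNAT decision.
# Assumes a single IPv4 VPC CIDR; the real plugin programs iptables rules.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit status 0) if IPv4 address $1 is inside CIDR $2.
in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# snat_source POD_IP NODE_IP VPC_CIDR DEST_IP EXTERNAL_SNAT
# Print the source IP a pod's packet carries when it leaves the node
# (a NAT gateway further along the path may still translate it).
snat_source() {
  local pod_ip=$1 node_ip=$2 vpc_cidr=$3 dest_ip=$4 external_snat=$5
  if [[ $external_snat == true ]] || in_cidr "$dest_ip" "$vpc_cidr"; then
    echo "$pod_ip"    # not translated on the node: the packet keeps the pod IP
  else
    echo "$node_ip"   # default SNAT to the node's primary ENI primary IP
  fi
}

vpc=10.75.0.0/16    # assumed EKS VPC CIDR
pod=10.75.59.171    # pod IP from the article's NGINX logs
node=10.75.49.241   # node IP from the article's NGINX logs
peer=10.80.1.10     # hypothetical server in a connected VPC

snat_source "$pod" "$node" "$vpc" 10.75.60.5 false  # same VPC
snat_source "$pod" "$node" "$vpc" "$peer" false     # connected VPC, default
snat_source "$pod" "$node" "$vpc" "$peer" true      # connected VPC, external SNAT
```

The three calls print the pod IP, the node IP & the pod IP again. For a hostNetwork pod, the pod & node IPs are identical, so every branch yields the node IP.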

The only exception to this “source NAT” behavior is a pod whose spec contains hostNetwork: true, such as the pods of the EKS-managed kube-proxy & VPC CNI add-ons. A hostNetwork pod uses its node’s network namespace, so its IP already is the node’s primary IP & there is nothing to translate.

For more details, see Source NAT for EKS Pods.
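To check this on your own cluster, you can compare each kube-system pod’s IP with its node’s IP. A minimal sketch, assuming kubectl access: the jsonpath template reads the standard pod fields status.podIP, status.hostIP & spec.hostNetwork, while the shared_ip_pods function is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "NAME POD_IP HOST_IP HOST_NETWORK" lines on stdin
# and print the pods whose IP equals their node's IP (i.e. hostNetwork pods).
shared_ip_pods() {
  # HOST_NETWORK is empty when spec.hostNetwork is unset, so default it to "false".
  awk '$2 != "" && $2 == $3 { hn = ($4 == "" ? "false" : $4); print $1 "\thostNetwork=" hn }'
}

# Usage against a live cluster (requires kubectl access):
# kubectl get pods -n kube-system \
#   -o jsonpath='{range .items[*]}{.metadata.name} {.status.podIP} {.status.hostIP} {.spec.hostNetwork}{"\n"}{end}' \
#   | shared_ip_pods
```

On an EKS cluster, the aws-node & kube-proxy pods should be the ones listed, each with hostNetwork=true.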

External SNAT

VPC CNI’s source NAT behavior can be disabled when there is an external NAT gateway available to translate IPs.

To enable external SNAT:

kubectl set env daemonset -n kube-system \
  aws-node AWS_VPC_K8S_CNI_EXTERNALSNAT=true

If you manage your EKS cluster with Terraform, the EKS add-on resource would look like this:

data "aws_eks_addon_version" "vpc_cni" {
  addon_name         = "vpc-cni"
  kubernetes_version = aws_eks_cluster.eks.version
  most_recent        = true # latest add-on version for this Kubernetes version
}

resource "aws_eks_addon" "vpc_cni" {
  cluster_name                = aws_eks_cluster.eks.name
  addon_name                  = "vpc-cni"
  addon_version               = data.aws_eks_addon_version.vpc_cni.version
  preserve                    = false
  resolve_conflicts_on_create = "OVERWRITE"
  resolve_conflicts_on_update = "OVERWRITE"
  service_account_role_arn    = aws_iam_role.vpc_cni.arn

  # Add-on configuration: sets the env var on the aws-node DaemonSet
  configuration_values = jsonencode({
    env = { AWS_VPC_K8S_CNI_EXTERNALSNAT = "true" }
  })
}

For more details, see AWS_VPC_K8S_CNI_EXTERNALSNAT.
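Whichever method you use, you can confirm that the setting reached the aws-node DaemonSet. kubectl set env with --list prints the containers’ environment variables as NAME=value lines; the externalsnat_value function below is a hypothetical helper that extracts the flag & falls back to false, the documented default, when the variable is unset. A sketch, not an official tool.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl set env ... --list` output on stdin and
# print the AWS_VPC_K8S_CNI_EXTERNALSNAT value ("false" if it is not set).
externalsnat_value() {
  local value
  value=$(grep -E '^AWS_VPC_K8S_CNI_EXTERNALSNAT=' | tail -n 1 | cut -d= -f2-)
  echo "${value:-false}"
}

# Usage against a live cluster (requires kubectl access):
# kubectl set env daemonset aws-node -n kube-system --list | externalsnat_value
```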

Testing SNAT

To see external SNAT take effect, curl NGINX servers hosted in the same VPC, in a connected VPC & on the public internet from an EKS pod, once before & once after enabling external SNAT, & check which source IP each server logs.
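Reading each server’s access log by eye works, but a small helper makes the comparison explicit. A minimal sketch, assuming NGINX’s default log format, where the client address ($remote_addr) is the first field; the seen_as function is hypothetical, the <pod-name>, <pod-ip>, <node-ip> & <target-server-ip> values are placeholders (kubectl reports the pod & node IPs as status.podIP & status.hostIP), & the log path is an assumption that varies by installation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify the client IP of each NGINX access log line
# read on stdin as the test pod's IP, its node's IP, or something else
# (for example, a NAT gateway).
# Usage: seen_as POD_IP NODE_IP < access.log
seen_as() {
  local pod_ip=$1 node_ip=$2
  awk -v pod="$pod_ip" -v node="$node_ip" '{
    # $1 is $remote_addr in NGINX default "main" and "combined" log formats
    if ($1 == pod)       print $1, "pod"
    else if ($1 == node) print $1, "node"
    else                 print $1, "other"
  }'
}

# Example flow (placeholders; log path is an assumption):
# kubectl exec <pod-name> -- curl -s http://<target-server-ip>/
# sudo tail -n 1 /var/log/nginx/access.log | seen_as <pod-ip> <node-ip>
```

Run against the connected-VPC server’s log, the label should change from node before enabling external SNAT to pod after it, matching the log lines in this section.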

Same VPC

The target server in this case can either be another pod in the same EKS cluster or any server outside the cluster in the same VPC.

When you curl the server IP/URL from an EKS pod, you’ll see the pod’s private IP show up in the server logs, both before & after enabling external SNAT:

10.75.59.171 - - [10/Sep/2023:14:23:11 +0000] "GET / HTTP/1.1" 200 4833 "-" "curl/7.66.0" "-"

Connected VPC

This is where external SNAT makes a difference.

Before enabling external SNAT, the target server sees the node IP:

10.75.49.241 - - [10/Sep/2023:15:35:09 +0000] "GET / HTTP/1.1" 200 4833 "-" "curl/7.66.0" "-"

After enabling external SNAT, the target server sees the pod IP:

10.75.59.171 - - [10/Sep/2023:15:38:15 +0000] "GET / HTTP/1.1" 200 4833 "-" "curl/7.66.0" "-"

Public Internet

Servers on the public internet always see your NAT gateway’s public IP. They’re unaffected by external SNAT.

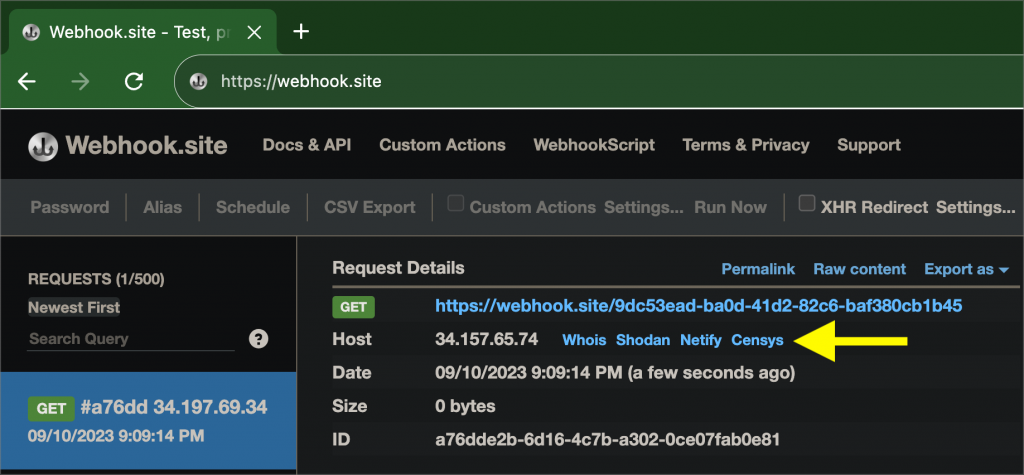

A quick way to test this, is to use a webhook from webhook.site, instead of spinning up a server. curl the webhook from an EKS pod, before & after enabling external SNAT, & in both cases, the webhook will see the NAT gateway IP:

Conclusion

This article demonstrates how disabling VPC CNI’s source NAT behavior can be useful when identifying the exact source of a network request originating from an EKS cluster is important within a company’s private network.

For example, consider apps in EKS pods authenticating against your self-hosted Active Directory servers, accessing files in central FTP/NFS servers, or using a centrally-hosted API gateway like Kong.

About the Author ✍🏻

Harish KM is a Principal DevOps Engineer at QloudX. 👨🏻💻

With over a decade of industry experience as everything from a full-stack engineer to a cloud architect, Harish has built many world-class solutions for clients around the world! 👷🏻♂️

With over 20 certifications in cloud (AWS, Azure, GCP), containers (Kubernetes, Docker) & DevOps (Terraform, Ansible, Jenkins), Harish is an expert in a multitude of technologies. 📚

These days, his focus is on the fascinating world of DevOps & how it can transform the way we do things! 🚀