Dimensioning & Costing AWS Workloads using AWS Cost & Usage Reports (CUR)

Table of Contents

- Introduction

- Create AWS Cost & Usage Reports (CUR)

- Activate Cost Allocation Tags

- Configure Amazon Athena to Query CUR

- Example — Measure Workload Cost

- CUR Fields of Interest

- Conclusion

- References

- About the Author ✍🏻

Introduction

This article describes an approach for correlating the business outcome of an AWS workload with the cost of its AWS resources.

The end goal of this activity is to reach a point where, given the input parameters of a workload (like data ingested), we can predict the cost of the AWS resources needed to run it.

We begin by running the workload several times with varying input parameters. Each time, we measure both the workload's input metrics (like the amount of data ingested) & the AWS bill it generates.

Eventually, we arrive at a correlation like this:

| Workload | Input Metric #1 | Input Metric #2 | Input Metric #3 | Total Cost of All AWS Resources Involved |
|---|---|---|---|---|
| Workload Run #1 | M1a | M2a | M3a | C1 |
| Workload Run #2 | M1b | M2b | M3b | C2 |
| Workload Run #3 | M1c | M2c | M3c | C3 |

Different AWS services are used to gather this data:

| Data | Service |
|---|---|
| Input Metrics | Standard CloudWatch service-level metrics or custom business-oriented metrics, like orders processed |
| AWS Cost | AWS Cost & Usage Reports |

Create AWS Cost & Usage Reports (CUR)

The AWS CUR contains the most comprehensive set of cost & usage data available. Reporting can be enabled at the account or organization level, & reports can break down costs by the hour. Once enabled, the first report can take up to 24 hours to be delivered. Reports are delivered to an S3 bucket of your choice & are updated at least once a day, up to three times a day.

Follow these steps to enable CUR:

Open https://console.aws.amazon.com/billing/home#/reports/create

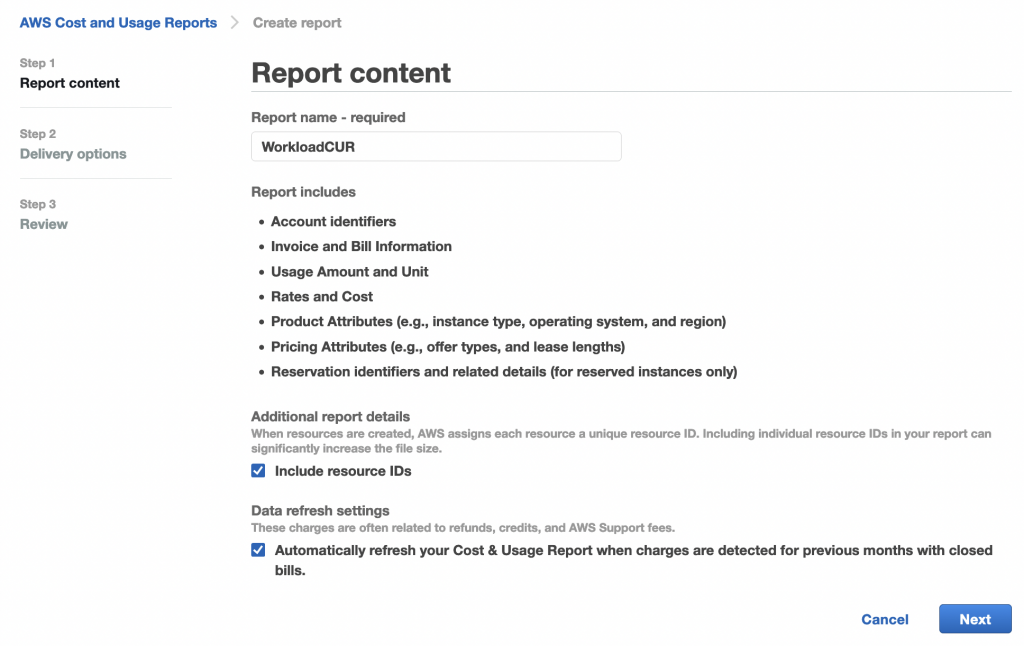

Provide a name for the report & select “include resource IDs” & auto-refresh:

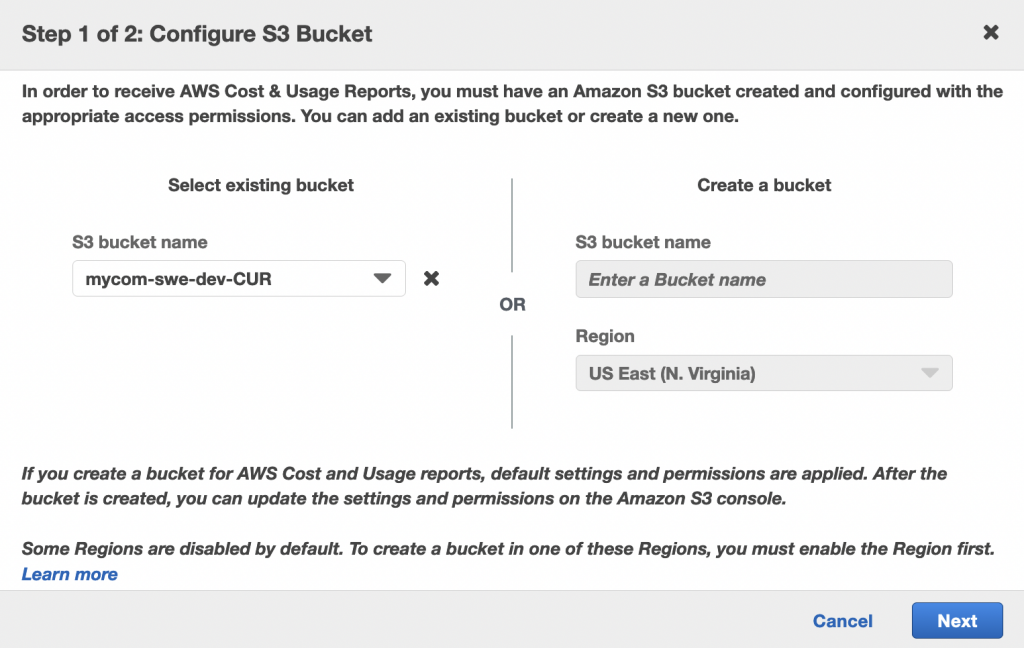

On the next screen, click Configure to configure the S3 bucket where the reports will be delivered.

You can either create a new bucket or choose an existing one:

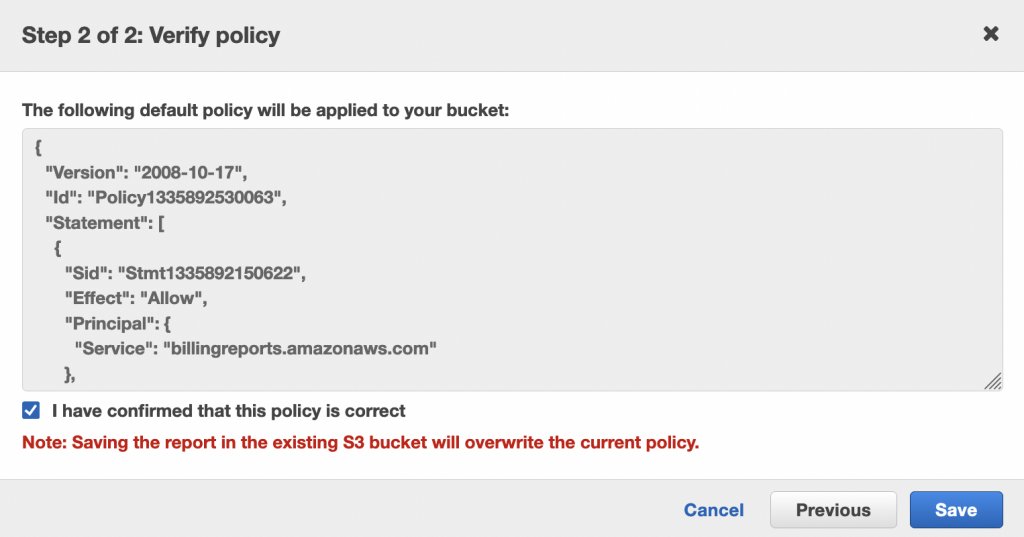

AWS generates a bucket policy that allows the AWS billing service to deliver reports to this bucket:

```json
{
  "Version": "2008-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "billingreports.amazonaws.com"
      },
      "Action": [
        "s3:GetBucketAcl",
        "s3:GetBucketPolicy"
      ],
      "Resource": "arn:aws:s3:::my-cur-bucket",
      "Condition": {
        "StringEquals": {
          "aws:SourceArn": "arn:aws:cur:us-east-1:123456789012:definition/*",
          "aws:SourceAccount": "123456789012"
        }
      }
    },
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "billingreports.amazonaws.com"
      },
      "Action": [
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::my-cur-bucket/*",
      "Condition": {
        "StringEquals": {
          "aws:SourceArn": "arn:aws:cur:us-east-1:123456789012:definition/*",
          "aws:SourceAccount": "123456789012"
        }
      }
    }
  ]
}
```

Verify the policy & save:

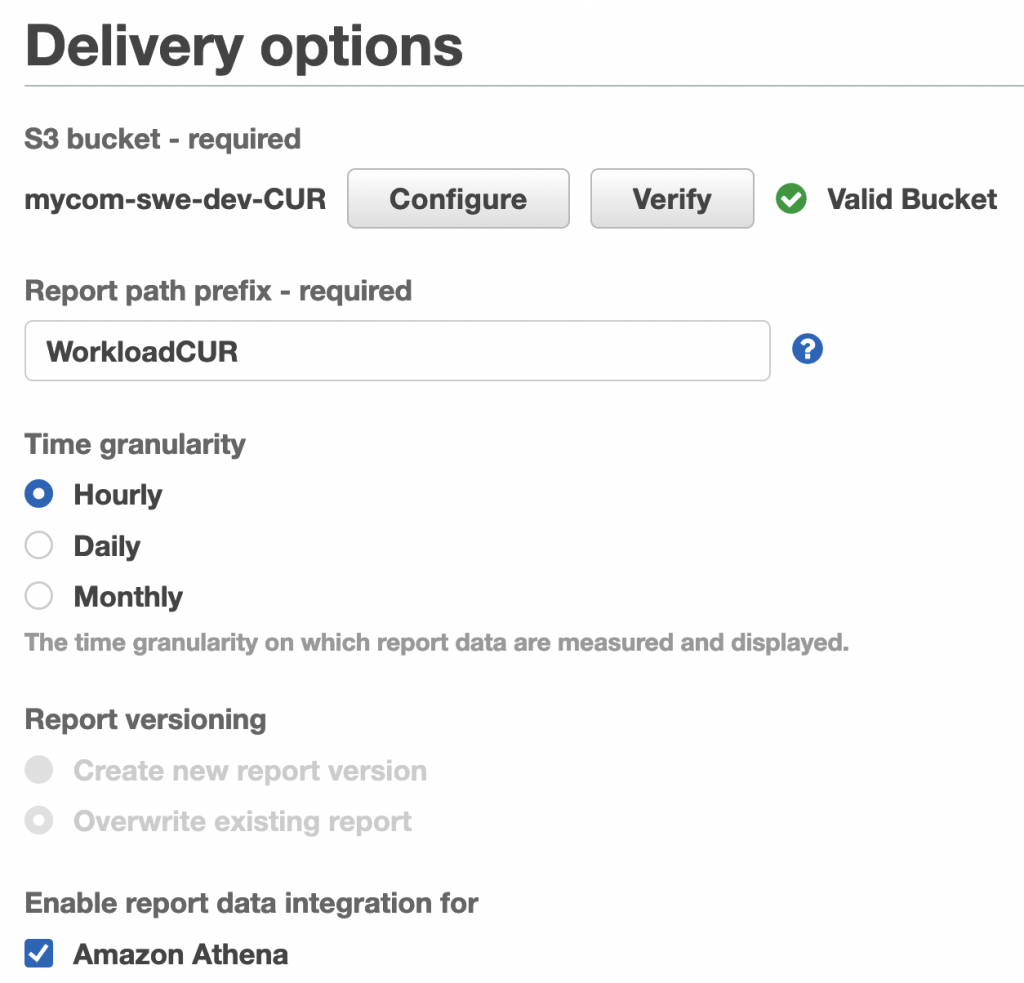
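If you manage the bucket outside the console (for example, with infrastructure as code), the same policy can be rendered for your own bucket & account. A minimal sketch, assuming the two-statement layout of the console-generated policy & the us-east-1 CUR definition ARN it uses; `my-cur-bucket` & `123456789012` remain placeholders:

```python
import json


def cur_bucket_policy(bucket: str, account_id: str, region: str = "us-east-1") -> dict:
    """Build the bucket policy that lets billingreports.amazonaws.com deliver CUR files.

    Statement 1 lets billing read the bucket ACL & policy; statement 2 lets it
    write report objects. Both are limited to CUR definitions in this account.
    """
    condition = {
        "StringEquals": {
            "aws:SourceArn": f"arn:aws:cur:{region}:{account_id}:definition/*",
            "aws:SourceAccount": account_id,
        }
    }
    principal = {"Service": "billingreports.amazonaws.com"}
    return {
        "Version": "2008-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": principal,
                "Action": ["s3:GetBucketAcl", "s3:GetBucketPolicy"],
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": condition,
            },
            {
                "Effect": "Allow",
                "Principal": principal,
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": condition,
            },
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON, ready to paste into the bucket's permissions tab.
    print(json.dumps(cur_bucket_policy("my-cur-bucket", "123456789012"), indent=2))
```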

Provide a report path prefix, select hourly granularity & enable report data integration for Amazon Athena.

Complete the CUR creation wizard.
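The wizard is the simplest route, but the same report can also be defined through the CUR API. A hedged sketch using boto3's `put_report_definition`, assuming Athena integration, which to my understanding requires Parquet output & overwritten report versions; the report name, bucket & prefix you pass in are your own choices:

```python
def build_report_definition(report_name: str, bucket: str, prefix: str,
                            bucket_region: str = "us-east-1") -> dict:
    """CUR definition: hourly, with resource IDs, auto-refresh & Athena integration."""
    return {
        "ReportName": report_name,
        "TimeUnit": "HOURLY",                       # hourly granularity
        "Format": "Parquet",                        # Athena integration uses Parquet
        "Compression": "Parquet",
        "AdditionalSchemaElements": ["RESOURCES"],  # "Include resource IDs"
        "S3Bucket": bucket,
        "S3Prefix": prefix,
        "S3Region": bucket_region,                  # Region of the bucket itself
        "AdditionalArtifacts": ["ATHENA"],          # Athena artifacts, e.g. crawler-cfn.yml
        "RefreshClosedReports": True,               # auto-refresh closed months
        "ReportVersioning": "OVERWRITE_REPORT",     # expected alongside ATHENA
    }


def create_report(definition: dict) -> None:
    """Create the report; the CUR API is called in us-east-1."""
    import boto3  # imported here so the builder above runs without AWS libraries

    cur = boto3.client("cur", region_name="us-east-1")
    cur.put_report_definition(ReportDefinition=definition)
```

Calling `create_report(build_report_definition(...))` needs credentials allowed to call `cur:PutReportDefinition`, & the bucket must already carry the billing bucket policy.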

Activate Cost Allocation Tags

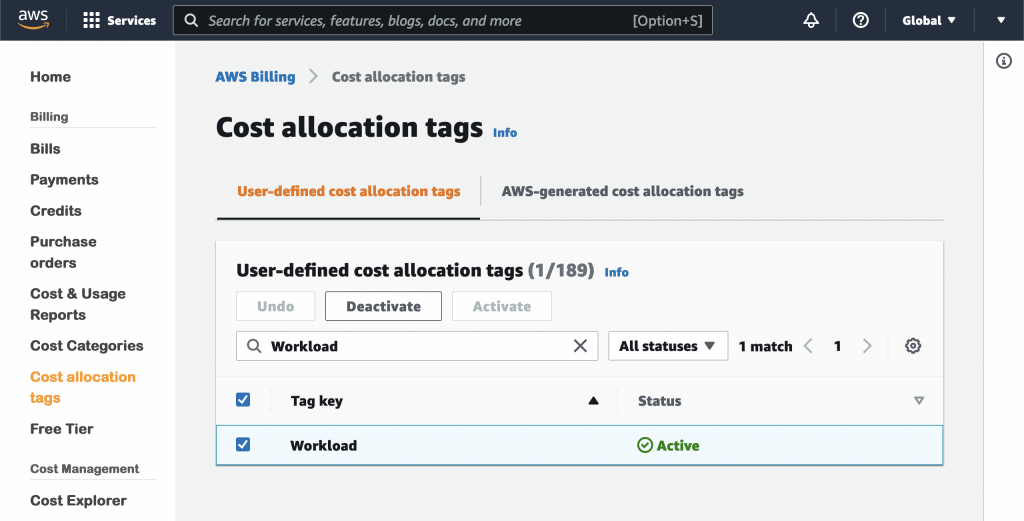

Since an AWS account could be running several workloads at any given time, one way to isolate a single instance of a workload is to tag all resources of that workload with a unique value. However, in order for any tags to appear in CUR, they must be activated as cost allocation tags. To do so:

Open https://console.aws.amazon.com/billing/home#/tags

If you’re using AWS Organizations, cost allocation tags can only be activated in the management account (formerly called the master account).

Cost allocation tags activated in the management account also show up in the CURs of member accounts.

Search for the tag you’ll be using for your workloads, then select & activate it.

You cannot add a new tag to the cost allocation tags console. The console lists tags that already exist in your account/organization. You must select & activate one of them.

If the tag you wish to use is not listed here, apply it to a resource temporarily & wait a few hours for it to show up here. You can then activate it.
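Activation can also be scripted. A hedged sketch using the Cost Explorer API through boto3 (`list_cost_allocation_tags` & `update_cost_allocation_tags_status`); as in the console, a tag key must already exist on some resource before it can be activated. The example tag key `workload` is an assumption inferred from the `resource_tags_user_workload` column used in the Athena queries:

```python
def activation_request(tag_keys: list[str]) -> list[dict]:
    """Payload for UpdateCostAllocationTagsStatus that marks each key Active."""
    return [{"TagKey": key, "Status": "Active"} for key in tag_keys]


def missing_tag_keys(requested: list[str], listed_tags: list[dict]) -> list[str]:
    """Keys billing has not seen yet, so they cannot be activated."""
    known = {tag["TagKey"] for tag in listed_tags}
    return [key for key in requested if key not in known]


def activate_tags(tag_keys: list[str]) -> list[dict]:
    """Activate user-defined tags; with Organizations, run in the management account."""
    import boto3

    ce = boto3.client("ce")
    listed = ce.list_cost_allocation_tags(TagKeys=tag_keys, Type="UserDefined")
    missing = missing_tag_keys(tag_keys, listed["CostAllocationTags"])
    if missing:
        raise ValueError(f"Apply these tags to a resource & retry later: {missing}")
    response = ce.update_cost_allocation_tags_status(
        CostAllocationTagsStatus=activation_request(tag_keys)
    )
    return response.get("Errors", [])  # per-key failures, if any
```

For example, `activate_tags(["workload"])` returns an empty list when the key was activated without errors.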

Configure Amazon Athena to Query CUR

A CUR can be sizeable, even for small & medium-sized accounts. Amazon Athena lets us query it with SQL to fetch the information we need after running a workload.

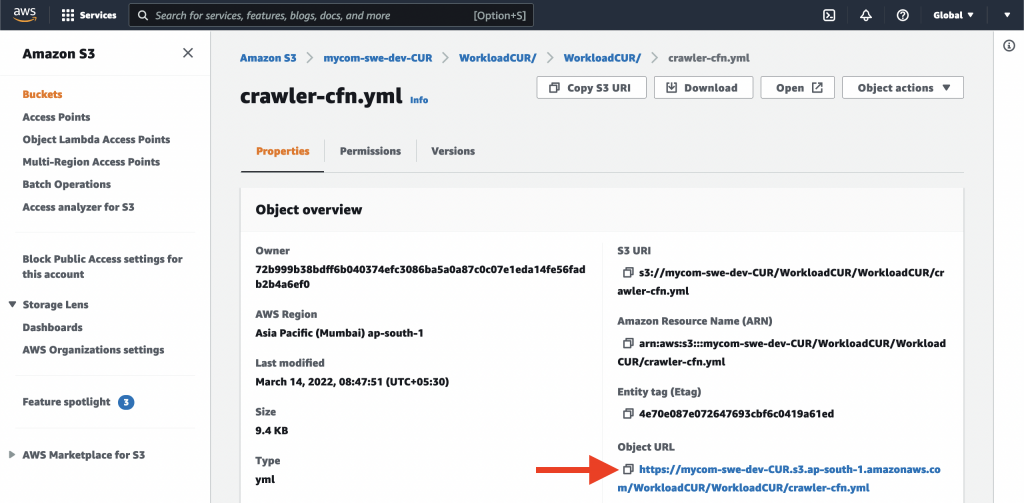

To configure Athena, we use a CloudFormation template that AWS generated when we configured CUR. Start by opening the CUR bucket & locating crawler-cfn.yml, then copy its Object URL:

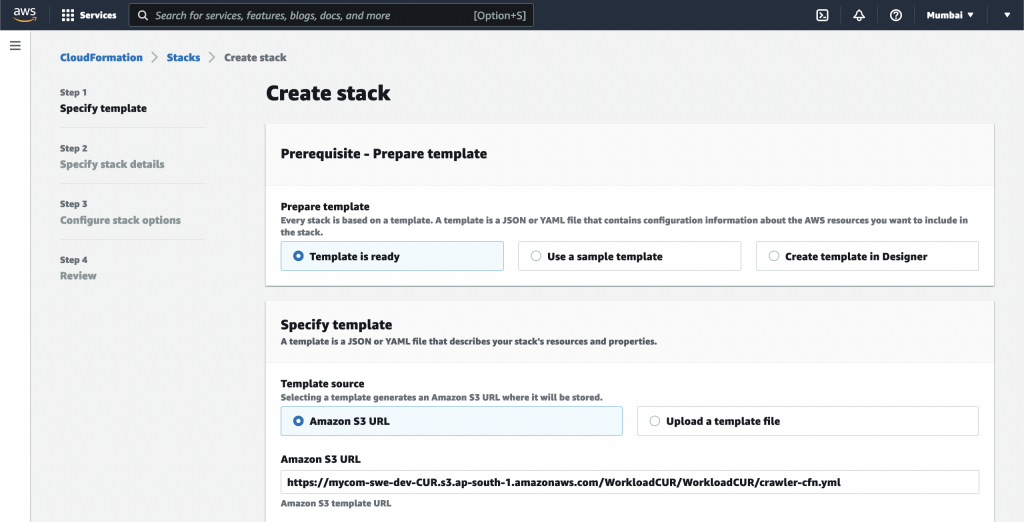

Next, open https://console.aws.amazon.com/cloudformation/home#/stacks/create/template

Create a CloudFormation stack using the Object URL.

The stack creates:

- An AWS Glue database, table & crawler

- A Lambda function to trigger the Glue crawler every time AWS creates or updates CUR in the bucket

- The S3 event trigger for the Lambda function

- The associated Lambda permissions & IAM roles & policies
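The Lambda function in this stack is generated by AWS & its actual code may differ, but its job can be sketched as follows. This minimal version assumes a crawler named `AWSCURCrawler-workload-cur` (hypothetical; use the name of the crawler the stack created). It starts the crawler for each S3 notification & treats an already running crawl as success, since one CUR update can write several objects. The Glue client is passed in so the logic can be exercised without AWS:

```python
CRAWLER_NAME = "AWSCURCrawler-workload-cur"  # hypothetical crawler name


def start_crawler_for_event(event: dict, glue) -> str:
    """Start the CUR crawler when S3 reports new or updated report objects."""
    if not event.get("Records"):
        return "ignored"  # not an S3 notification
    try:
        glue.start_crawler(Name=CRAWLER_NAME)
        return "started"
    except glue.exceptions.CrawlerRunningException:
        # A crawl triggered by an earlier object is still in progress.
        return "already-running"


def lambda_handler(event, context):
    import boto3  # bundled with the Lambda Python runtime

    return start_crawler_for_event(event, boto3.client("glue"))
```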

Example — Measure Workload Cost

Everything we have done so far was a one-time setup activity as a prerequisite to the “costing workloads” activity. Now let’s see how to use this setup to measure AWS costs for a workload.

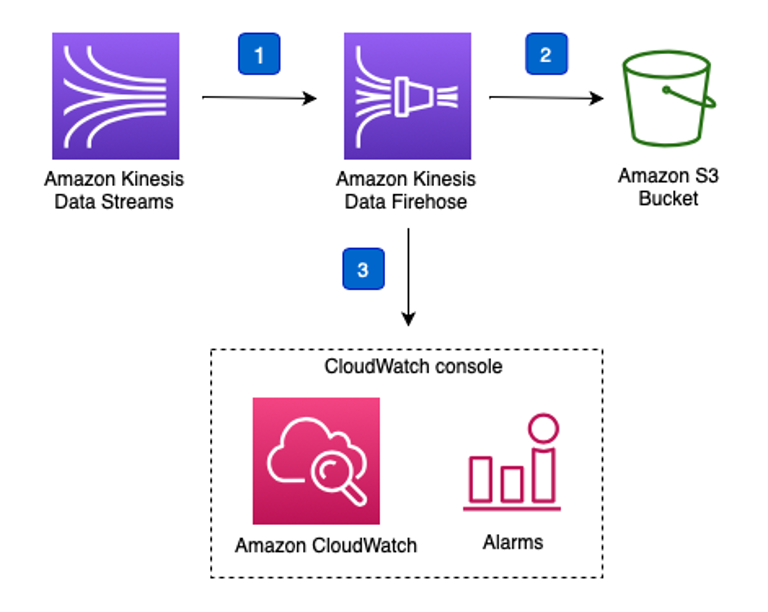

As an example workload, we will use “option 3” of an AWS-provided streaming data solution:

AWS Streaming Data Solution for Amazon Kinesis

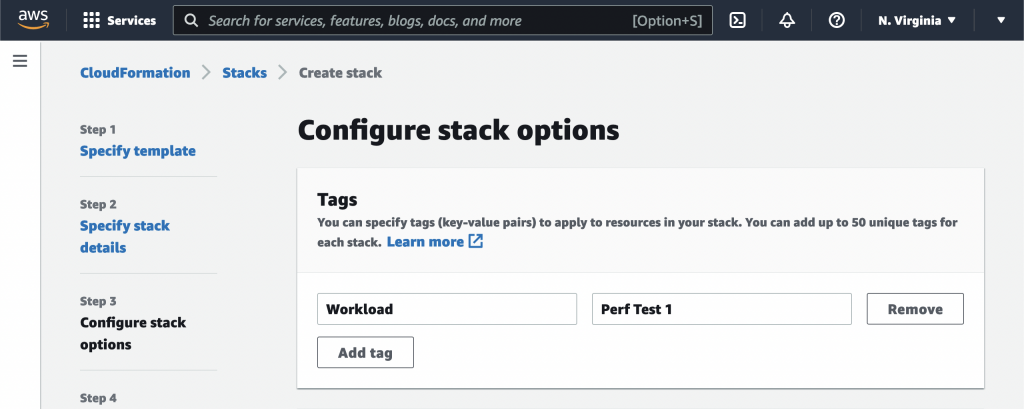

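Launch the provided CloudFormation template, making sure to tag the workload with the cost allocation tag you activated earlier (the queries below filter on this tag's value, Perf Test 1).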

We can now use the Kinesis Data Generator (KDG) to simulate incoming data to the workload.

Leave the KDG running for a while. Watch the Kinesis CloudWatch metrics.
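The KDG is a browser-based tool. If you prefer to generate load from a script, a rough substitute (a swapped-in technique, not part of the AWS solution) batches synthetic JSON records into Kinesis `PutRecords` calls with boto3. The record shape & stream name are hypothetical; `PutRecords` accepts at most 500 records per call, & this sketch counts rejected records rather than retrying them:

```python
import json
import random
import time
import uuid


def make_record() -> dict:
    """One synthetic event; the fields are illustrative only."""
    return {"id": str(uuid.uuid4()), "value": random.randint(1, 100), "ts": time.time()}


def to_batches(records: list[dict], batch_size: int = 500) -> list[list[dict]]:
    """Turn records into PutRecords entries, at most batch_size per call."""
    entries = [
        {"Data": json.dumps(r).encode("utf-8"), "PartitionKey": r["id"]}
        for r in records
    ]
    return [entries[i:i + batch_size] for i in range(0, len(entries), batch_size)]


def send(stream_name: str, total: int) -> int:
    """Send `total` synthetic records & return how many Kinesis rejected."""
    import boto3

    kinesis = boto3.client("kinesis")
    failed = 0
    for batch in to_batches([make_record() for _ in range(total)]):
        response = kinesis.put_records(StreamName=stream_name, Records=batch)
        failed += response["FailedRecordCount"]
    return failed
```

Running `send(...)` in a loop for the duration of a test run plays the same role as leaving the KDG running.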

After this simulated workload has run for a while, we need to wait a few hours for the CUR to be updated.

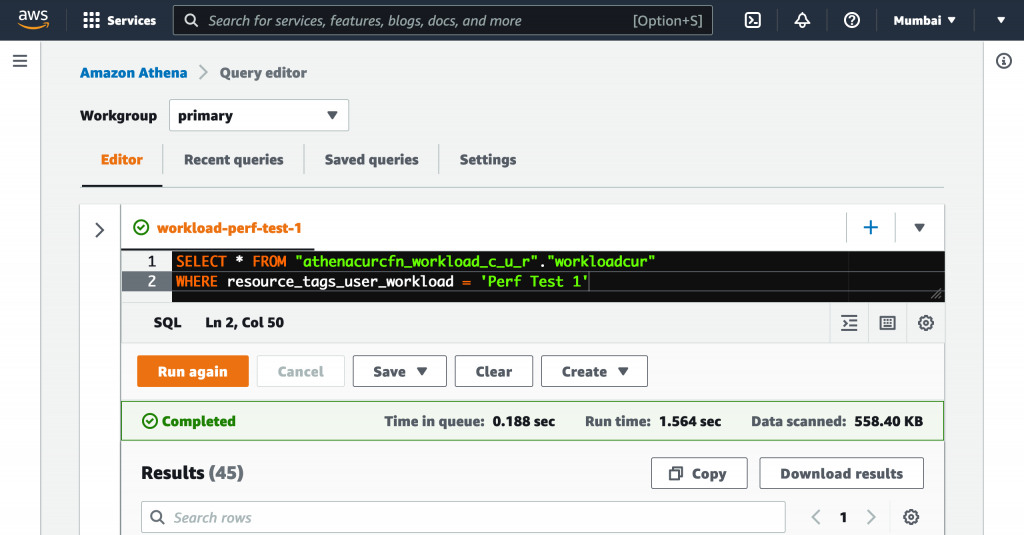

After the CUR has updated, query Athena to see the cost records associated with this workload:

```sql
SELECT * FROM "athenacurcfn_workload_c_u_r"."workloadcur"
WHERE resource_tags_user_workload = 'Perf Test 1'
```
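These queries can also be run from a script, which helps when you repeat the measurement after every workload run. A minimal sketch using boto3's Athena client; the database & table names come from the query above, while the results location `s3://my-athena-results/` is a hypothetical placeholder. The tag value is embedded as a SQL string literal, so single quotes are doubled:

```python
import time

DATABASE = "athenacurcfn_workload_c_u_r"
TABLE = "workloadcur"


def cost_query(tag_value: str) -> str:
    """SQL summing the unblended cost of every line item carrying the workload tag."""
    literal = tag_value.replace("'", "''")  # escape quotes inside a SQL string literal
    return (
        "SELECT SUM(line_item_unblended_cost) AS total_cost "
        f'FROM "{DATABASE}"."{TABLE}" '
        f"WHERE resource_tags_user_workload = '{literal}'"
    )


def run_query(sql: str, output_location: str = "s3://my-athena-results/") -> list[list[str]]:
    """Run a query in Athena, wait for it to finish & return rows (header row first)."""
    import boto3

    athena = boto3.client("athena")
    query_id = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": DATABASE},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]

    while True:  # poll until the query reaches a terminal state
        status = athena.get_query_execution(QueryExecutionId=query_id)
        state = status["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(2)
    if state != "SUCCEEDED":
        raise RuntimeError(f"Athena query {query_id} ended in state {state}")

    rows = athena.get_query_results(QueryExecutionId=query_id)["ResultSet"]["Rows"]
    return [[col.get("VarCharValue", "") for col in row["Data"]] for row in rows]
```

For example, `run_query(cost_query("Perf Test 1"))[1][0]` is the workload's total cost as a string (row 0 holds the column names). `get_query_results` returns at most 1,000 rows per page, so a `SELECT *` over many line items would need pagination.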

To see the total cost of the workload, run:

```sql
SELECT SUM(line_item_unblended_cost)
FROM "athenacurcfn_workload_c_u_r"."workloadcur"
WHERE resource_tags_user_workload = 'Perf Test 1'
```

CUR Fields of Interest

| Field | Description |
|---|---|
| line_item_usage_account_id | The AWS account ID that consumed the resource |
| line_item_usage_start_date, line_item_usage_end_date | Start & end of the period whose cost the line item captures. For reports with hourly granularity, this is usually the start & end of an hour. |
| line_item_product_code | The AWS service that was consumed. E.g., AmazonKinesis |
| line_item_usage_type | The usage metric the resource is billed on. E.g., Storage-ShardHour & PutRequestPayloadUnits for Kinesis |
| line_item_resource_id | The unique resource identifier. E.g., KDS ARN, S3 bucket name |
| line_item_usage_amount | The quantity of the resource used, in the unit of line_item_usage_type. E.g., 1 ShardHour for KDS |
| line_item_unblended_cost, line_item_blended_cost | See “Why does the ‘blended’ annotation appear on some line items in my AWS bill?” & “Understanding your AWS Cost Datasets: A Cheat Sheet” |
| line_item_line_item_description | Human-readable description of the charge. Examples: “$0.015 per provisioned shard-hour” (Kinesis); “$0.005 per 1,000 PUT, COPY, POST, or LIST requests” (S3); “AWS Lambda – Requests Free Tier – 1,000,000 Requests – US East (Northern Virginia)” |
| pricing_public_on_demand_cost | The cost of the line item at public on-demand prices. What you actually pay can differ because of factors like credits, reservations & Savings Plans, agreements with AWS, support fees, etc. |
| resource_tags_user_YOUR-TAG | The cost allocation tags you activate |
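Because each row pairs a usage quantity with its cost, grouping a workload's line items by `line_item_product_code` & `line_item_usage_type` shows where the money goes & the effective rate paid. A minimal sketch over rows shaped like the `SELECT *` results (Athena returns values as strings, hence the `float` conversions); the sample values in the usage note are hypothetical, using the $0.015 shard-hour rate quoted in the table, which varies by Region:

```python
from collections import defaultdict


def summarize(line_items: list[dict]) -> dict[tuple[str, str], dict[str, float]]:
    """Total usage & unblended cost per (product code, usage type).

    Dividing the summed cost by the summed usage gives the effective rate.
    """
    totals = defaultdict(lambda: {"usage": 0.0, "cost": 0.0})
    for item in line_items:
        key = (item["line_item_product_code"], item["line_item_usage_type"])
        totals[key]["usage"] += float(item["line_item_usage_amount"])
        totals[key]["cost"] += float(item["line_item_unblended_cost"])
    for total in totals.values():
        total["rate"] = total["cost"] / total["usage"] if total["usage"] else 0.0
    return dict(totals)
```

For instance, three hourly rows for a 2-shard stream, each with `line_item_usage_amount` 2 & `line_item_unblended_cost` 0.03, summarize to 6 ShardHours costing $0.09 at an effective $0.015 per shard-hour.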

For a detailed description of all CUR fields, see the CUR Data dictionary.

To correlate the workload's inputs with its cost, we can combine CloudWatch metrics, like PutRecords.Bytes or IncomingBytes for a Kinesis stream, with relevant CUR fields, like line_item_usage_amount & line_item_blended_cost.
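For the Kinesis example, a hedged sketch of that combination: pull `IncomingBytes` for the stream over the test window with CloudWatch `GetMetricStatistics`, then divide the workload's CUR cost by the volume ingested. The stream name & time window are inputs you supply; using the same window as the CUR line items (hourly, per `line_item_usage_start_date`) keeps the two figures comparable:

```python
from datetime import datetime


def total_from_datapoints(datapoints: list[dict]) -> float:
    """Add up the Sum statistic across CloudWatch datapoints."""
    return sum(dp["Sum"] for dp in datapoints)


def cost_per_gib(total_cost: float, total_bytes: float) -> float:
    """Cost of ingesting one GiB: one data point for the cost model."""
    return total_cost / (total_bytes / 1024 ** 3)


def incoming_bytes(stream_name: str, start: datetime, end: datetime) -> float:
    """Total bytes written to a Kinesis stream between start & end."""
    import boto3

    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/Kinesis",
        MetricName="IncomingBytes",
        Dimensions=[{"Name": "StreamName", "Value": stream_name}],
        StartTime=start,
        EndTime=end,
        Period=3600,          # hourly, matching the CUR granularity
        Statistics=["Sum"],
    )
    return total_from_datapoints(response["Datapoints"])
```

Each run's `cost_per_gib(total_cost, incoming_bytes(...))`, alongside the raw GiB ingested, fills one row of the correlation table at the start of this article.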

Conclusion

This article describes how you can use the AWS CUR to gain detailed insights into your AWS bill. Although using the CUR directly may not be practical for larger workloads, understanding it will help you appreciate how other tools & services on the market use the CUR as their data source to provide actionable insights.

References

The AWS Cost and Usage Report (CUR)

Configure Cost and Usage Reports

What are AWS Cost and Usage Reports?

About the Author ✍🏻

Harish KM is a Principal DevOps Engineer at QloudX. 👨🏻‍💻

With over a decade of industry experience as everything from a full-stack engineer to a cloud architect, Harish has built many world-class solutions for clients around the world! 👷🏻‍♂️

With over 20 certifications in cloud (AWS, Azure, GCP), containers (Kubernetes, Docker) & DevOps (Terraform, Ansible, Jenkins), Harish is an expert in a multitude of technologies. 📚

These days, his focus is on the fascinating world of DevOps & how it can transform the way we do things! 🚀