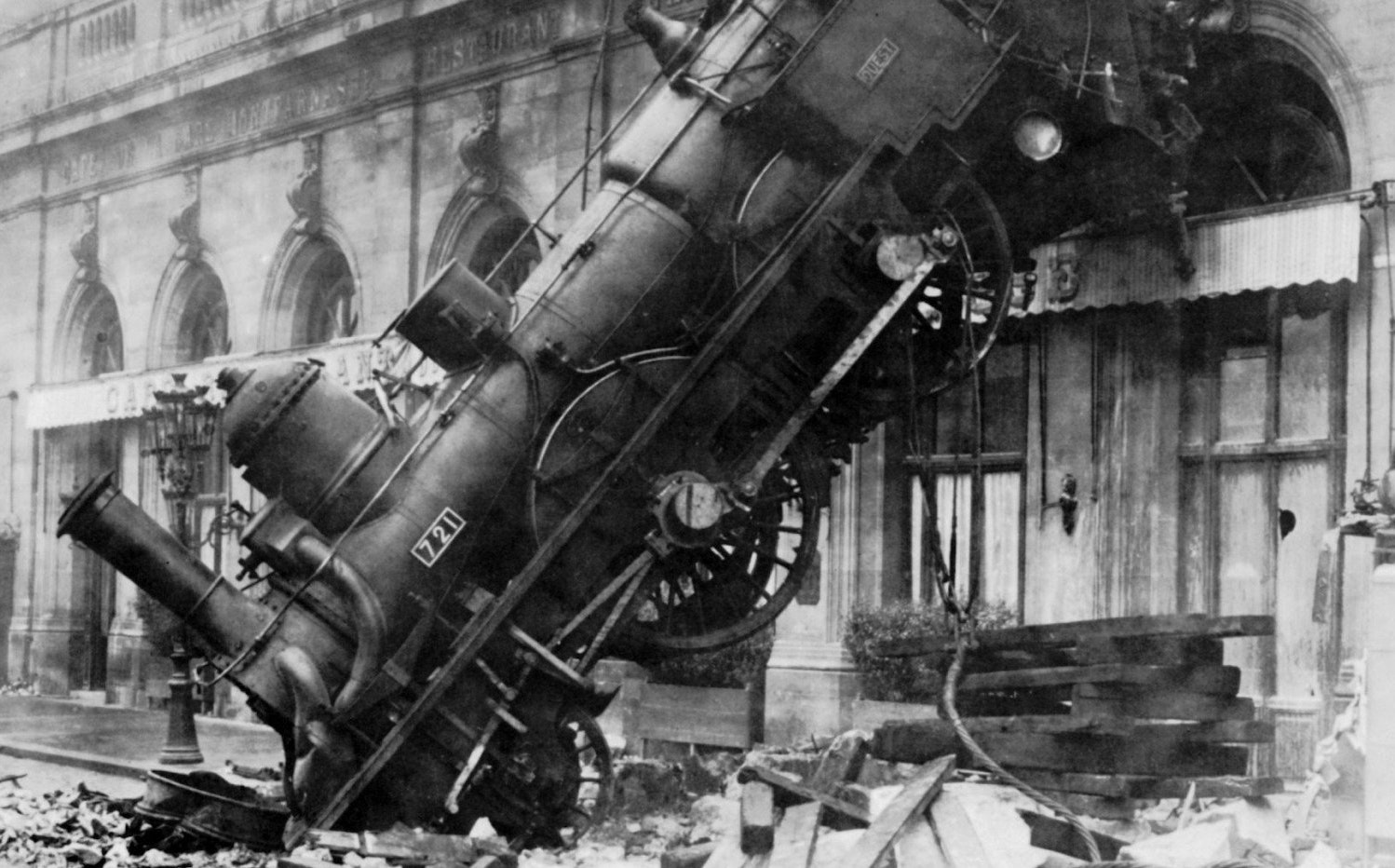

Automatically Test Your EC2 Backups to Ensure Recoverability in Case of Disasters

Backups are important! But testing backups is just as important! A backup that doesn’t work when restored is as useless as having no backup at all. For this reason, many organizations conduct regular disaster recovery (DR) drills to ensure that backups exist and that everything works when restored.

This article takes this one step further. It describes a way to automatically test your backup as soon as it’s created. In this case, we will look at an EC2 backup created by the AWS Backup service.

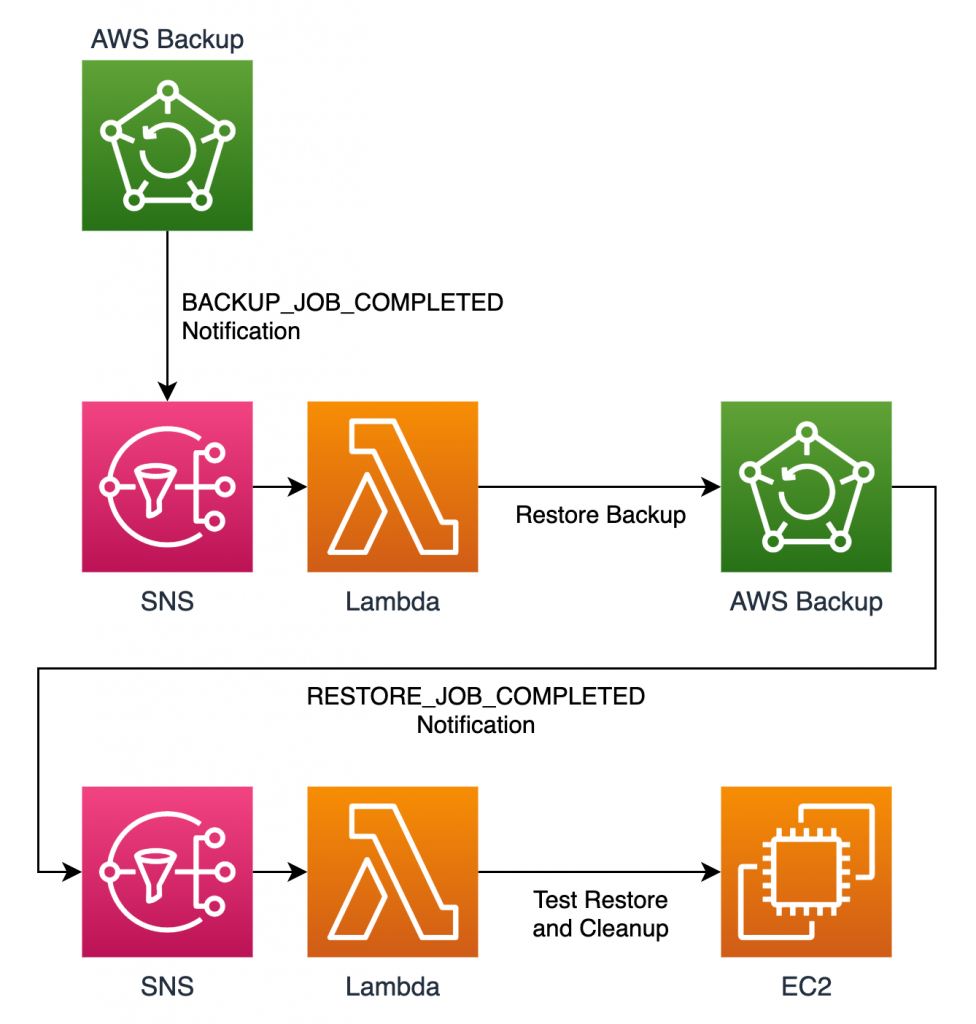

Here is the plan:

- AWS Backup creates an EC2 backup.

- It notifies SNS when the backup is done.

- SNS invokes a Lambda function.

- The function asks AWS Backup to restore that backup.

- AWS Backup restores the backup.

- It notifies SNS when restore is done.

- SNS invokes the Lambda function again.

- The function tests the restored EC2.

- Then terminates it if everything’s OK.

Backup Notifications

The first order of business is to set up notifications for backup tasks. Backup vaults in AWS Backup can send notifications to SNS. However, you can’t configure these from the AWS console. You must use the AWS CLI:

    aws backup put-backup-vault-notifications \
        --backup-vault-name YOUR-BACKUP-VAULT \
        --sns-topic-arn YOUR-SNS-TOPIC \
        --backup-vault-events BACKUP_JOB_COMPLETED RESTORE_JOB_COMPLETED

Lambda Function
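The notifications configured above reach Lambda wrapped in an SNS event. To illustrate the shape the handler has to deal with, the sketch below builds a hypothetical event and extracts the job type and job ID from it. The message wording is an assumption inferred from the parsing logic used in this article, not a documented format, and `parse_job_notification` is a name made up for this example.

```python
def parse_job_notification(event):
    """Return (job_type, job_id) from an SNS-wrapped AWS Backup notification.

    Assumes the message ends with a sentence such as
    "Backup Job Id : <id>" or "Restore Job Id : <id>".
    """
    message = event['Records'][0]['Sns']['Message']
    last_sentence = message.split('.')[-1]        # text after the final period
    job_type = last_sentence.split(' ')[1]        # "Backup" or "Restore"
    job_id = last_sentence.split(':')[1].strip()  # text after the colon
    return job_type, job_id


# Hypothetical event, trimmed to the fields that are actually read.
sample_event = {
    'Records': [{
        'Sns': {
            'Message': 'An AWS Backup job was completed successfully. '
                       'Backup Job Id : 1234ABCD-EXAMPLE'
        }
    }]
}

print(parse_job_notification(sample_event))
```

This parsing is purely positional, so it would break if the message wording changed or if the last sentence contained an extra period or colon. Logging the raw message during a first test run is a cheap way to confirm the format in your own account.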

Next, create the Lambda function that will handle the notifications. Here it is:

    import boto3
    import urllib3

    backup = boto3.client('backup')


    def lambda_handler(event, context):
        # The job type and job ID are parsed from the last sentence of the SNS message.
        last_sentence = event['Records'][0]['Sns']['Message'].split('.')[-1]
        job_type = last_sentence.split(' ')[1]

        if job_type == 'Backup':
            backup_job_id = last_sentence.split(':')[1].strip()
            backup_info = backup.describe_backup_job(BackupJobId=backup_job_id)

            # Only EC2 backups are tested.
            resource_type = backup_info['ResourceType']
            if resource_type != 'EC2':
                return

            backup_vault_name = backup_info['BackupVaultName']
            recovery_point_arn = backup_info['RecoveryPointArn']
            metadata = backup.get_recovery_point_restore_metadata(
                BackupVaultName=backup_vault_name,
                RecoveryPointArn=recovery_point_arn
            )

            iam_role_arn = backup_info['IamRoleArn']

            # Reset these two keys in the restore metadata before restoring.
            metadata['RestoreMetadata']['CpuOptions'] = '{}'
            metadata['RestoreMetadata']['NetworkInterfaces'] = '[]'

            backup.start_restore_job(
                RecoveryPointArn=recovery_point_arn,
                IamRoleArn=iam_role_arn,
                Metadata=metadata['RestoreMetadata']
            )

        if job_type == 'Restore':
            restore_job_id = last_sentence.split(':')[1].strip()
            restore_info = backup.describe_restore_job(RestoreJobId=restore_job_id)

            # ARN format: arn:partition:service:region:account:resource-type/resource-id
            created_arn = restore_info['CreatedResourceArn']
            resource_type = created_arn.split(':')[2]
            if resource_type != 'ec2':
                return

            ec2_resource_type = created_arn.split(':')[5].split('/')[0]
            if ec2_resource_type != 'instance':
                return

            ec2 = boto3.client('ec2')
            instance_id = created_arn.split(':')[5].split('/')[1]
            instance_details = ec2.describe_instances(InstanceIds=[instance_id])
            public_ip = instance_details['Reservations'][0]['Instances'][0]['PublicIpAddress']

            http = urllib3.PoolManager()
            try:
                # Pass a full URL with an explicit scheme rather than a bare IP address.
                resp = http.request('GET', f'http://{public_ip}')
                if resp.status == 200:
                    # Health check passed: the restored instance is no longer needed.
                    ec2.terminate_instances(InstanceIds=[instance_id])
                else:
                    print('Validation FAILED!')
            except Exception as e:
                print(str(e))

The first half of this function listens for backup complete events and triggers a restore of each completed EC2 backup.
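Before starting the restore, the handler overrides two keys in the restore metadata. As a small illustration, the same adjustment can be written as a pure function that is easy to test without touching AWS. The function name and the sample metadata values are hypothetical; only the two keys and their replacement values come from the handler above.

```python
def prepare_restore_metadata(restore_metadata):
    """Return a copy of the restore metadata with CpuOptions and
    NetworkInterfaces reset, leaving the input dict untouched."""
    prepared = dict(restore_metadata)  # shallow copy is enough: values are strings
    prepared['CpuOptions'] = '{}'
    prepared['NetworkInterfaces'] = '[]'
    return prepared


# Hypothetical metadata, shaped like a flat dict of string values.
original = {
    'InstanceType': 't3.micro',
    'CpuOptions': '{"CoreCount":1,"ThreadsPerCore":2}',
    'NetworkInterfaces': '[{"DeviceIndex":0}]',
}

prepared = prepare_restore_metadata(original)
print(prepared['CpuOptions'], prepared['NetworkInterfaces'])
```

Returning a copy rather than mutating in place keeps the metadata that AWS Backup returned available for logging or comparison if a restore fails.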

The second half listens for restore complete events, performs an HTTP health check on the restored EC2 instance, and terminates it if the health check passes. If the check fails, the instance is left running and the failure is logged.
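A freshly restored instance may need some time before its web server answers, so a single request can fail even when the backup is fine. One possible refinement, sketched here under that assumption, is to retry the health check a few times before declaring failure. The `wait_until_healthy` helper and its parameters are hypothetical and not part of the function above. It takes any callable that returns an HTTP status code, so it can wrap the urllib3 request from the handler.

```python
import time


def wait_until_healthy(check, attempts=5, delay_seconds=10, sleep=time.sleep):
    """Call check() up to `attempts` times; return True on the first HTTP 200."""
    for attempt in range(1, attempts + 1):
        try:
            if check() == 200:
                return True
        except Exception as error:  # e.g. connection refused or timed out
            print(f'Attempt {attempt} failed: {error}')
        if attempt < attempts:
            sleep(delay_seconds)  # wait before the next attempt
    return False


# Stand-in check that reports 503 twice and then 200.
responses = iter([503, 503, 200])
result = wait_until_healthy(lambda: next(responses), attempts=5, delay_seconds=0)
print(result)
```

In the handler, `check` could be a lambda that performs the urllib3 request and returns `resp.status`. The total waiting time has to fit within the Lambda function's configured timeout.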

About the Author ✍🏻

Harish KM is a Principal DevOps Engineer at QloudX. 👨🏻‍💻

With over a decade of industry experience as everything from a full-stack engineer to a cloud architect, Harish has built many world-class solutions for clients around the world! 👷🏻‍♂️

With over 20 certifications in cloud (AWS, Azure, GCP), containers (Kubernetes, Docker) & DevOps (Terraform, Ansible, Jenkins), Harish is an expert in a multitude of technologies. 📚

These days, his focus is on the fascinating world of DevOps & how it can transform the way we do things! 🚀